Security Architects Need to be Wrong on the Internet

(So We Can Be Right When It Matters)

Reading Time: 5 minutes

The cybersecurity community desperately needs public experimentation, transparent failures, and open sharing of incomplete knowledge. This blog attempts to accelerate our collective learning as we move towards data maturity.


The Myth of the Cybersecurity Expert

There are no comprehensive experts in cybersecurity.

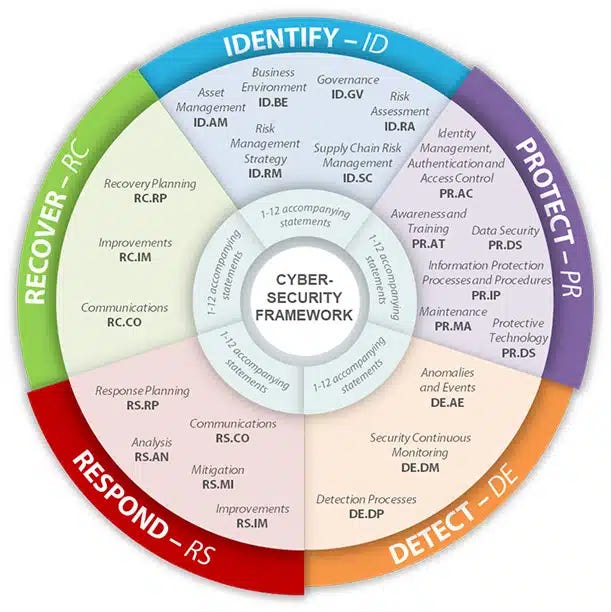

Let me be clear about what I mean: cybersecurity encompasses somewhere between 10 and 30 distinct domains (depending on who’s counting).

No single person has mastered all of them.

Even if someone achieves depth across all domains (barely plausible), the field moves too fast for static expertise to remain current. Technologies that didn’t exist five years ago are now critical infrastructure. Threat techniques evolve monthly. Regulatory frameworks are rewritten annually. Cloud platforms release new security-relevant services weekly.

Well-defined “best practice” is a moving target, perpetually obsolescent.

This creates an important problem: we desperately need people willing to share what they’re learning, what they’re trying, and—crucially—what isn’t working. But then we punish being wrong. Incomplete knowledge meets “well, actually” corrections.

So we remain silent. We keep our experiments private, our failures hidden, our incomplete understanding carefully concealed behind confident assertions about the narrow domains where we do have expertise.

And collectively, we move slower than we must.

The AI Imperative

Whether we like it or not, we are entering an era where AI will fundamentally change both offensive and defensive cybersecurity. Not in some distant, speculative future. Now.

Hostile actors are already leveraging AI to operate at machine speed with massive contextual complexity:

Machine-Speed Reconnaissance: AI systems can map attack surfaces, identify vulnerabilities, and select exploitation techniques faster than human defenders can detect the scanning.

Contextual Social Engineering: Large language models craft convincing phishing messages personalized to individual targets at scale, iterating based on responses in real-time.

Automated Exploitation: AI-guided exploitation tools chain vulnerabilities, adapt to defenses, and operate autonomously across distributed infrastructure.

Defensive Evasion: Machine learning models learn defensive patterns and automatically adapt attack techniques to evade detection, operating inside our OODA loops.

This isn’t hypothetical. These capabilities exist today, and (ready or not) they’ll rapidly improve. The gap between hostile uses of AI and what most defensive cybersecurity operations can detect and respond to is widening.


The Data Architecture Problem

Current cybersecurity data architectures are not ready to match the speed and complexity of hostile AI.

Most SOCs still operate on architectures designed for human-speed analysis:

Security data scattered across dozens of separate tools, each with proprietary data formats and carefully concealed technical debt

Detection rules written manually, deployed slowly, tested inadequately

Investigations conducted through sequential manual queries across multiple systems, each taking minutes to hours

Threat intelligence consumed as periodic manually curated reports rather than continuous data streams

Machine learning models, where they exist at all, operating on narrow subsets of available security data

These architectures were adequate when both attackers and defenders operated at human speed. They are increasingly inadequate when attackers operate at machine speed with AI assistance.
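As a rough illustration of the first item in the list above, here is a minimal sketch of what moving from per-tool formats to a shared record might look like. Everything in it is an assumption made for illustration: the two tools ("edr" and "firewall"), their field names, and the `Event` shape are hypothetical and do not correspond to any real product or published schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """Shared record shape (hypothetical, not a published schema)."""
    source: str
    timestamp: datetime
    host: str
    action: str


def from_edr(raw: dict) -> Event:
    # Hypothetical EDR export: epoch seconds, "hostname", "event_type".
    return Event(
        source="edr",
        timestamp=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
        host=raw["hostname"].lower(),
        action=raw["event_type"],
    )


def from_firewall(raw: dict) -> Event:
    # Hypothetical firewall log: ISO 8601 string, "src_host", "verdict".
    return Event(
        source="firewall",
        timestamp=datetime.fromisoformat(raw["time"]),
        host=raw["src_host"].lower(),
        action=raw["verdict"],
    )


def timeline_by_host(events):
    """Group normalized events per host, ordered by time."""
    grouped = defaultdict(list)
    for event in sorted(events, key=lambda e: e.timestamp):
        grouped[event.host].append(event)
    return dict(grouped)


events = [
    from_firewall({"time": "2025-10-01T12:00:05+00:00",
                   "src_host": "WS-042", "verdict": "blocked"}),
    from_edr({"ts": 1759320000, "hostname": "ws-042",
              "event_type": "process_start"}),
]
timeline = timeline_by_host(events)
```

Once both sources share one shape, a single pass over `timeline["ws-042"]` replaces two separate manual queries. A real pipeline would need far more than this (schema governance, streaming ingestion, enrichment), so treat it only as a picture of the idea.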

To deploy defensive AI capabilities that can match hostile AI—real-time anomaly detection across all security telemetry, automated investigation and response, mature threat hunting models (read: “data science”, but that’s a topic for another day), AI-guided incident response—we need fundamentally different data architectures.

We need modern data stack architectures capable of machine-speed analytics, massive contextual correlation, and continuous learning.


What This Blog Covers (And Why)

“The future is here; it’s just not evenly distributed.”

- William Gibson, author of Neuromancer

Some SOCs are succeeding already.

“It feels like I'm in a Star Destroyer looking at Planet of the Apes playing out below.”

- Snarky SOC manager, using AI tools that work for him

We just don’t know if it will work for us. Especially culturally.

I intend to gather and share from the organizations I encounter:

what they’re trying,

what’s working, and

what’s failing.

This will move us all faster than any individual expert could. We need that speed, or we’ll fail as a whole, each of us in isolation.


Structure

The blog publishes:

Technical Deep-Dives: Architecture patterns, technology comparisons, implementation details for hands-on architects and engineers

Implementation & Practice: Real-world challenges, cost optimization, operational lessons for SOC managers and security leaders

Expert Insights & Interviews: Thought leader perspectives, vendor validation, emerging trends for the broad security + data engineering community

Grounding

This blog will make specific, opinionated arguments for particular architectural directions that point towards cybersecurity data maturity, and I will inevitably be wrong. So I’m adopting best practices to keep this work grounded in:

Literature review: Comprehensive analysis of academic research on security data management, data lake architectures, and stream processing

Hypothesis testing: Systematic evaluation of specific claims about modern data stack capabilities through quantitative analysis

Industry validation: Case studies and expert interviews documenting real-world implementations

Intellectual honesty: Transparent documentation of contradictory evidence, limitations, and unresolved questions

Pragmatism

Technology discussions are frequently abstracted to the point of being useless. Authors are encouraged to use abstract concepts “to keep their work timeless” or to not offend any vendors who miss a mark.

Meanwhile, architects are desperate for practical advice.

I’m going to try something unusual here (and maybe a little too sexy to work well at first, but let’s try, right?):

Provide a security architecture MCP server that

1. builds arguments on a foundation of documented evidence,

2. lets you access the full research context to customize/update for your needs.

Introducing the Security Architecture Decision Tool

From LIGER Stack to Implementation: Vendor Selection

An Invitation to Be Wrong Together

Here’s my request: if you find errors, contradictions, or better approaches than what I’ve documented, share them. Publicly. Write blog posts, create issues, post on social media, publish contradictory papers.

Be wrong on the Internet with me.

The cybersecurity community desperately needs more public experimentation, more transparent failure documentation, more open sharing of incomplete knowledge. We need to overcome the cultural pressure toward appearing expert and embrace the collaborative uncertainty that drives rapid learning.

The window to prepare our data architectures for machine-speed defense is closing. We don’t have time for slow, careful, private experimentation followed by eventual publication of perfected knowledge.

We need to try things, share results, fail publicly, learn collectively, and iterate rapidly.

Challenge assumptions. Share experiments.

Welcome to Security Data Commons.

This is the opening post for Security Data Commons, launching October 2025.

Jeremy Wiley

October 2025

Next Post:

A Security Architect Walks Into a Data Engineering Conference

The realization hit me just as Joe Reis started talking at a data engineering event in 2023.

A Note on AI Assistance

This blog is written with substantial assistance from AI language models, particularly Claude (Anthropic). I view this not as a limitation but as appropriate practice for a blog about preparing for AI-enabled cybersecurity.

Claude assists with research synthesis, structural organization, technical explanations, and prose refinement. But the arguments, architectural recommendations, hypothesis frameworks, and evidence assessments are my own, grounded in my professional experience and research.

I believe the future of cybersecurity work involves defenders learning how to leverage AI collaboration. This blog attempts to model that collaboration transparently.